Welcome Letter

January 22, 2024

When are we bringing in more AI?

That’s the question factory workers in Kentucky recently had for management. They had helped their kids with homework using ChatGPT – now they wanted to deploy the technology in their work lives.

But, as many firms have found, it’s not so simple.

“Just putting generative AI in the workplace is not going to change things,” said Mark Gorenberg, Chair of the MIT Corporation and founder of the first venture capital fund dedicated to AI.

“It’s changing the workflow to accommodate how generative AI works with humans that is really where the huge benefit could be.”

The challenge going forward, according to Gorenberg, “is how we can reskill at scale.”

In conversations with more than 40 business leaders across industries beginning to deploy Generative AI, we have been finding that we’re at a moment of great experimentation across a variety of domains – from technology development to work reorganization and success metrics.

Many of these experiments are happening behind closed doors within complex organizations, hidden from public view. And yet, the lessons of what works – and what doesn’t – and how to deploy these powerful tools most responsibly are important to organizations, workers, consumers, and society at large.

At MIT, where scholars and students have been developing and studying AI tools for decades, we see this as a moment for collaboration. That’s why we’ve convened leading companies, policymakers, and non-profits as part of MIT’s Working Group on Generative AI and the Work of the Future – to facilitate knowledge sharing and research on best practices about the ways these new technologies are reshaping work.

In this monthly newsletter, driven by MIT graduate students, we will feature our latest findings, insights from students and faculty, and voices from members of the working group on the frontlines.

We invite you to engage in the working group by responding to the newsletter’s open questions, participating in our quarterly meetings, or sharing your experience working with generative AI at workofthefuture@mit.edu.

Sincerely,

Working Group Co-Leads

Ben Armstrong

Kate Kellogg

Julie Shah

January 2024 Working Group Meeting: Key Takeaways

The first quarterly meeting of the MIT Working Group took place on January 10. We hosted brief talks by industry leaders on Generative AI investment and implementation, followed by small group discussions about technical best practices, training and re-skilling, innovation, and identifying generative AI use cases. Here is the agenda along with some top takeaways:

Agenda

Introduction: Ben Armstrong, MIT

Investing in AI: Mark Gorenberg, Chair of the MIT Corporation & Managing Director, Zetta Venture Partners

Governing Large Language Models: Kent Walker, President of Global Affairs at Google and Alphabet

Generative AI in the Field: Michael Klein, Sr. Director of Architecture, Data Science, and Machine Learning at Liberty Mutual

Transforming HR with Generative AI: Jon Lester, VP, HR Technology, Data & AI at IBM

Breakout Groups (introduced by Kate Kellogg, MIT)

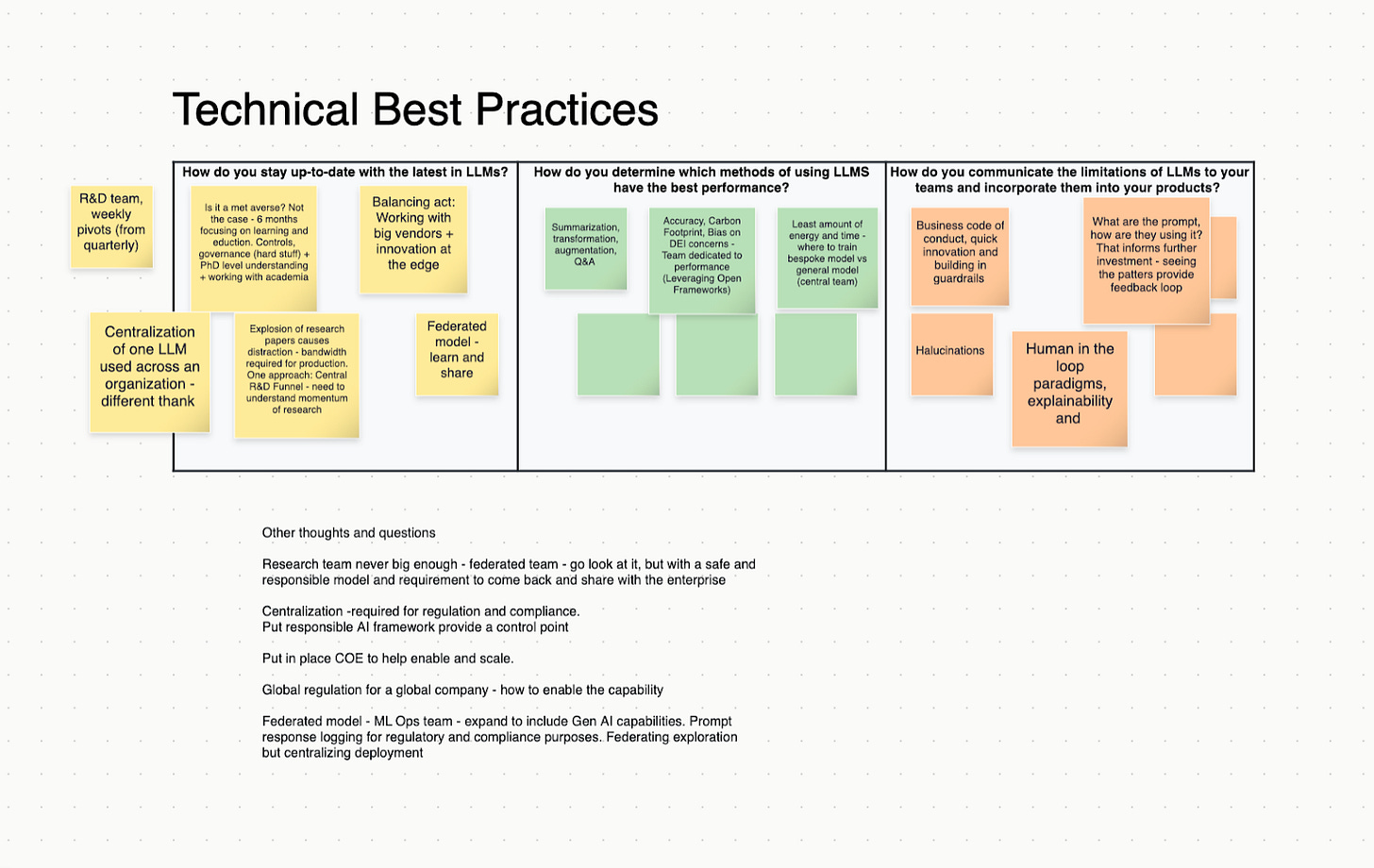

Technical best practices: How do you determine which methods of using LLMs have the best performance?

Identifying high-impact use cases: How have you determined which use cases to prioritize?

Innovating with Generative AI: How has your process of innovation changed with using LLM-based tools?

Training and Re-skilling: How has the integration of AI tools changed your demand for skills?

Summary Discussion moderated by Julie Shah, MIT

Discussions follow the Chatham House Rule: to promote knowledge sharing, participants are free to use information from the meeting, but we ask that they not identify the name or affiliation of the speaker without their permission.

Notes

Measuring unprecedented growth

Generative AI has had explosive growth, emerging as a $25 billion industry in the last three years. ChatGPT had the fastest market adoption of any technology in history: 100 million users in 2 months, 300 million in 6 months. Meanwhile, model size has grown from 62 million parameters (the ImageNet-winning model of 10 years ago) to an estimated 1.4 trillion parameters in GPT-4.

One distinguishing feature of this technology is its inversion of the human-machine relationship. Machines have long done quality assurance for human-produced material, but now the human’s role increasingly resembles quality assurance for machine-produced material. According to MIT Technology Review, 89% of employees say generative AI will help them do more work, and 88% say it will help them do higher-quality work.

“Ideas are easy, execution is hard.”

There are some key challenges in implementing generative AI at scale, including calibrating trust, building regulation, transitioning the workforce, and ensuring population-wide benefits.

We see this in practice at a US-based company adopting LLMs at an enterprise scale. Noticing employees using ChatGPT outside the company firewall, they recognized the need for a centralized task force to identify business cases and establish success metrics. They began experimenting with various generative AI vendors and open source models. Eventually, they rolled out a flexible core tool that would serve as an internal GPT chatbot / knowledge center – but with a catch.

They asked users to complete a 7-minute training that highlighted how users should engage with the tool. They needed to keep a human in the loop: the user, not the LLM, has the final say. They have now scaled to 7,000 internal users with 1,000 daily active users, 60% of whom are in non-technical business units. The business departments and a Responsible AI committee continually evaluate whether these uses generate value and align with the company’s position on responsible AI.

The Re-skilling Opportunity

As companies begin to integrate LLM tools into their workflows, they have focused on supporting new skills for the workers who use these tools. One approach is to reimagine workers as human-LLM centaurs, with humans focusing on the tasks best suited to their capabilities and LLMs taking the lead on tasks where they can excel. The role of employers is not only to enable but also to manage and support employees through the transition. Doing it well means promoting a learning culture and creating a user experience that attracts adoption. One benefit of democratizing the use of Generative AI is empowering employees to reinvent their own roles.

However, there are two potential limitations of this approach. One is that some employees might not be equipped to reinvent their role on their own – and could even resist it. Moreover, it might not be immediately clear where humans can outperform LLMs, and vice versa. Recent research from our own Kate Kellogg and her collaborators highlights this “jagged frontier” where LLMs are highly effective at some tasks, but can drag users down in others.

Breakout Groups

Technical Best Practices

Members discussed strategies to stay up-to-date with the latest in LLMs. Some reported having a team monitoring research and balancing enterprise usability while others allow federated experimentation of different models with a centralized reporting system. In assessing LLM performance, members expressed concerns about accuracy, the carbon footprint, DEI, and generalized/bespoke capabilities.

There was a discussion around how best to communicate the limitations of LLMs to employees. The main theme was that having a human-in-the-loop is vital. Members discussed the benefits of centralized control points for regulation, compliance, and prioritization of market needs — and the challenges of coupling this with a federated experimentation environment. More detailed notes can be found here.

High-Impact Use Cases

Prioritizing and assessing use cases is an exercise in balancing the risks and rewards of a particular application. Members introduced a scorecard-based approach that aimed to measure the complexity of an application, along with its potential business value. Several members discussed having a central organization evaluating use cases that solicited feedback from decentralized teams within an organization. More detailed notes can be found here.
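To make the scorecard idea concrete, here is a minimal sketch in Python. Members did not share their actual scoring rules, so the 1-5 scales, the value-to-complexity ratio, and the example use cases below are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    business_value: int  # hypothetical 1-5 scale, higher is more valuable
    complexity: int      # hypothetical 1-5 scale, higher is harder to build


def priority(use_case: UseCase) -> float:
    """Value-to-complexity ratio: an assumed rule, not one reported by members."""
    return use_case.business_value / use_case.complexity


def rank(use_cases: list[UseCase]) -> list[UseCase]:
    """Sort use cases from highest to lowest priority."""
    return sorted(use_cases, key=priority, reverse=True)


# Illustrative candidates only; the scores are made up.
candidates = [
    UseCase("Internal knowledge-base chatbot", business_value=4, complexity=2),
    UseCase("Automated claims decisions", business_value=5, complexity=5),
    UseCase("Meeting summarization", business_value=3, complexity=1),
]

for use_case in rank(candidates):
    print(f"{use_case.name}: {priority(use_case):.2f}")
```

A real scorecard would likely add further dimensions, such as risk, and would draw its scores from the decentralized teams that know each application best.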

Innovation with Generative AI

Participants saw the potential for LLMs to enable faster problem solving, but noted the need to make parallel investments into guardrails to ensure quality decision-making. Some companies are using LLMs to find patterns and opportunities for innovation, combining them with more traditional quantitative AI models for R&D. LLMs have also become a queryable knowledge base that frees up time and enables high-value workers to focus on innovation. More detailed notes can be found here.

Training and Re-skilling

A growth mindset, both on the part of leadership and employees, was highlighted as the most important skill for navigating this new era. Employees at all levels must adapt as new roles, such as conversational specialists and designers, materialize while older roles keep transforming. Prompt engineering is an important new skill for every knowledge worker (not just those who may have that title). Participants argued for treating LLMs as more than search engines, while ensuring that human experts remain the arbiters of truth. To this end, participants saw a need to educate users on the capabilities of LLMs to calibrate trust and ensure responsible adoption. A peer-to-peer showcase of positive outcomes has been valuable for some companies in shifting attitudes toward LLMs. More detailed notes can be found here.

Ask Us Anything

We’ve been talking to all sorts of companies about their early use cases of generative AI and their plans for deploying these technologies. In this and future newsletters, we will work through some of the questions that we hear from companies. If you’d like to pose a question for us to answer in the newsletter, please write to us at workofthefuture@mit.edu with the subject line “Ask MIT.” As a sample question, we pose the following:

“My company wants to build its own LLM. Where should we start?”

Given the immense computational and human resources required to successfully “build” a Large Language Model from scratch, it is imperative to evaluate whether creating something from scratch is truly necessary for your business needs. Depending on the model size, building a new LLM from scratch can cost anywhere from millions to hundreds of millions of dollars. For most use cases we have come across in our research thus far (broadly, knowledge base management, summarization, and ideation), fine-tuning and methods such as Retrieval Augmented Generation (RAG) are adequate for targeting a particular domain. Even for businesses with data privacy concerns about using public LLMs such as ChatGPT, there are open-source options such as Falcon and Llama.
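For readers unfamiliar with RAG, the core idea is to retrieve the most relevant passages from your own documents and place them in the prompt, so an existing model answers from your data without being retrained. The sketch below is a toy illustration under stated assumptions: it scores relevance with simple word overlap (production systems typically use learned embeddings and a vector index), the documents are invented, and the final call to an LLM is deliberately left out.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words counts (a stand-in for learned embeddings)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the question."""
    query = tokenize(question)
    ranked = sorted(documents, key=lambda d: cosine(query, tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, documents: list[str], k: int = 1) -> str:
    """Assemble the augmented prompt that would be sent to an LLM."""
    context = "\n".join(retrieve(question, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Invented company documents, for illustration only.
documents = [
    "Expense reports must be submitted within 30 days of travel.",
    "The cafeteria is open from 8 am to 3 pm on weekdays.",
    "New laptops are issued by the IT help desk on the second floor.",
]

prompt = build_prompt("When must expense reports be submitted?", documents)
print(prompt)
```

Because the knowledge lives in the retrieved documents rather than in the model weights, updating the system can be as simple as updating the document store.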

If your company still wants to embark on this journey, the first step is data. Data curation is arguably the most important part of the process to ensure quality output. The Internet (barring copyright issues), public datasets, private datasets (your company archives), and LLM-generated text are all good sources; simply put, the kinds of training data used will inform what kinds of tasks your LLM is good at. Non-private datasets will then likely require de-duplication and quality filtering.
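As a rough illustration of de-duplication and quality filtering, the sketch below removes exact duplicates (after normalizing case and whitespace) and drops very short or mostly non-alphabetic texts. The thresholds and sample texts are assumptions made for the example; real pipelines generally use more sophisticated near-duplicate detection and learned quality classifiers.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash identically."""
    return re.sub(r"\s+", " ", text.strip().lower())


def deduplicate(texts: list[str]) -> list[str]:
    """Keep the first occurrence of each normalized text."""
    seen: set[str] = set()
    unique = []
    for text in texts:
        digest = hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(text)
    return unique


def passes_quality(text: str, min_words: int = 5, min_alpha_ratio: float = 0.6) -> bool:
    """Heuristic filter; both thresholds are hypothetical."""
    if len(text.split()) < min_words:
        return False
    alpha = sum(ch.isalpha() for ch in text)
    return alpha / len(text) >= min_alpha_ratio


def clean_corpus(texts: list[str]) -> list[str]:
    """De-duplicate first, then apply the quality filter."""
    return [text for text in deduplicate(texts) if passes_quality(text)]


# Invented sample texts, for illustration only.
raw = [
    "Large language models are trained on large text corpora.",
    "large language models   are trained on large text corpora.",
    "Click here!!!",
    "$$$ 1234 5678 #### 0000 ---- ====",
    "Quality filtering removes pages that are mostly markup or noise.",
]

cleaned = clean_corpus(raw)
print(len(raw), "->", len(cleaned))
```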

The next step is choosing your model architecture, involving various design choices such as transformer type, activation functions, positional encoding, size, and much more.

The last two steps are training and evaluation. Training a model with hundreds of billions of parameters is time-consuming and requires additional training optimization techniques such as 3D parallelism and mixed-precision training, among many others.

Model evaluation is important for understanding the model’s performance in the use case for which it was trained. Recognized benchmarks such as ARC and MMLU are a good starting point, but domain-specific benchmarks are also important to develop. Finally, even with a domain-specific LLM, prompt engineering and fine-tuning are valuable techniques for providing additional context and improving accuracy.
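A domain-specific benchmark can start as a small set of multiple-choice questions with known answers, scored the same way as ARC or MMLU: by accuracy. The sketch below assumes a hypothetical model interface (a function that takes a question and its choices and returns the index of the chosen answer) and uses placeholder arithmetic questions in place of real domain items.

```python
from typing import Callable

# Hypothetical interface: (question, choices) -> index of the chosen answer.
Model = Callable[[str, list[str]], int]


def accuracy(model: Model, benchmark: list[dict]) -> float:
    """Fraction of benchmark items the model answers correctly."""
    if not benchmark:
        return 0.0
    correct = sum(
        model(item["question"], item["choices"]) == item["answer"]
        for item in benchmark
    )
    return correct / len(benchmark)


def always_first(question: str, choices: list[str]) -> int:
    """Trivial baseline that always picks the first choice."""
    return 0


# Placeholder items; a real benchmark would use questions from your domain.
domain_benchmark = [
    {"question": "2 + 2 = ?", "choices": ["4", "5", "6"], "answer": 0},
    {"question": "3 * 3 = ?", "choices": ["6", "9", "12"], "answer": 1},
    {"question": "10 - 7 = ?", "choices": ["3", "4", "5"], "answer": 0},
    {"question": "8 / 2 = ?", "choices": ["2", "3", "4"], "answer": 2},
]

print(f"Baseline accuracy: {accuracy(always_first, domain_benchmark):.2f}")
```

Comparing a candidate model against a trivial baseline like this one helps show whether a reported score reflects real capability or just the layout of the answer key.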

— Sabiyyah Ali, MIT Work of the Future Fellow

MIT Voices: Meet the Work of the Future Fellows

In this first newsletter, we’ll introduce the working group’s multi-talented fellows by sharing what most excites and concerns them about generative AI.

Sabiyyah Ali (Graduate Fellow)

A) Most exciting

In recent months, I’ve witnessed my coworkers often opting to “GPT” their questions rather than “Google” them. I see LLMs as a faster, more convenient way to obtain information from the Internet than combing through websites and articles. The information synthesis and retrieval capabilities of LLMs have been shockingly good, even for niche topics. I am hopeful about the potential for LLMs in federated learning and in bridging the Digital Divide.

B) Top concern

LLMs have been likened to a hammer for which we’re all trying to find a nail. David Rolnick, a Computer Scientist at McGill University, says, “the primary impact of a hammer is what is being hammered, not what is in the hammer.” I am concerned that this powerful hammer might also further interests not aligned with our human values. My doomsday scenario is seeing AI investment and development set us back in climate change, the wage gap, intellectual fraud, dissemination of misinformation, and much more. I believe AI policy has an enormous and important task ahead.

Carey Goldberg (Senior Fellow)

A) Most exciting

After many an AI winter and summer, the field has recently crossed into something mind-blowing, in a turn of events so sudden that even longtime experts say they’re shocked. Now comes the slower process of figuring out all the ways to optimize the use of generative AI, even as the technology keeps improving, and while there will be many disappointments and frustrations, I do expect the change to be dramatic – and unpredictable, but potentially very positive, particularly in health care and education. It’s intelligence that sets humans apart – and now we will have much more of it.

B) Top concern

What most scares me is the unknown unknowns. Top computer scientists admit they have no inkling about most of how large language models work, and yet keep marching forward with new models. Microsoft researcher Sebastien Bubeck says that generative AI “has randomized the future” – no one can confidently predict what comes next. When it comes to the workplace, I do think it’s reasonable to expect huge job displacement in the not-too-distant future.

Shakked Noy (Graduate Fellow)

A) Most exciting

I am most excited about the potential for generative AI to automate tedious, uninteresting subcomponents of professional work like data cleaning, basic coding, writing generic text, or managing files. This would free up professionals’ time to focus on the more interesting and creative aspects of their work.

B) Top concern

I am concerned that regulators and the public will be unable to properly oversee and regulate generative AI, either because advances in the technology happen too quickly for regulators to keep up or because regulation becomes a politically polarized issue.

Prerna Ravi (Graduate Fellow)

A) Most exciting

What I love about generative AI is that it opens up new opportunities for creativity and self-expression. Generative AI can assist and augment human creativity in fields like writing, art, and music. It can help generate new ideas, provide inspiration, and even facilitate collaboration with other humans to produce innovative solutions to their problems. This could streamline workflows, reduce costs, and enable more efficient content production.

B) Top concern

What concerns me most is the lack of general awareness surrounding the inner workings of these systems. AI literacy initiatives could help users articulate how bias propagates through datasets that underpin generative AI models. They can also help foster collaborative efforts from researchers, developers, policymakers, and society at large.

Lea Tejedor (Graduate Fellow)

A) Most exciting

There are so many ways to use technology to create, research, and explore. For many people, it's only possible to use computers in a way that's been packaged into software by someone else. Generative AI lowers the barrier to entry to use computers outside of the boundaries of existing software.

B) Top concern

Generative AI gives bad actors more reach and resources than they had before.

Azfar Sulaiman (Graduate Fellow)

A) Most exciting

I’m really fascinated with how generative AI will empower – and is empowering – us to reimagine our work, skills, and creativity. Some previously time-consuming and costly tasks have been reformed, allowing more time for creative problem solving and decision making. It truly feels like a blank canvas of ever-growing possibilities.

B) Top concern

Generative AI is a revolutionary tool meant to assist our work and decision-making, yet there is a risk that these tools might propagate systemic societal biases. Over-reliance on generative AI and withdrawal of critical evaluation while using these tools and their outputs is analogous to letting go of the steering wheel. I believe we must be vigilant and responsible in our ongoing engagement with generative AI.

Felix Wang (Graduate Fellow)

A) Most exciting

I am most excited about the democratization aspect of generative AI, in the sense that most prior technology breakthroughs required infrastructure or domain expertise to use, while LLMs can be used by anyone anywhere as long as there is an Internet connection. Moreover, the more that the public interacts with LLMs, the more data we have to make LLMs better at various tasks.

B) Top concern

As LLMs draw on more and more data, we are potentially producing some form of intelligence (maybe not AGI yet, but potentially autonomous enough, e.g. Ghost in the Shell-type entities) that can act independently and in a way that is not aligned with human interests. International cooperation and policies might be necessary to create guardrails that rein in the corporate arms race to produce ever better LLMs, without accidentally starting another AI winter that spreads AI pessimism and hinders research progress.

Whitney Zhang (Graduate Fellow)

A) Most exciting

I am most excited about the vastness of the possibilities with generative AI and how much interest there has been across domains. It has the potential to improve worker productivity, spur innovation, and make knowledge and skills more accessible.

B) Top concern

I am most concerned that this potential will be unrealized, either due to limitations of the technology itself or due to challenges of adoption. There are many challenges, including finding use cases, getting buy-in from stakeholders, and overcoming privacy and security hurdles. An unfortunate world would be one in which generative AI’s use cases are limited to those of a “so-so technology” that reduces costs to firms by replacing workers, but does not provide true increased productivity or benefits to all.

Recent Advances

The LLM landscape shifts constantly, with competing vendors introducing new models, features, and tools almost daily. One goal of this newsletter is to highlight noteworthy recent advances, like these:

LLMs as an (unreliable) Co-Worker

We’ve seen some people using LLMs almost as a coworker, something to bounce ideas off and solve problems with. Although decision making may be one of the most beneficial use cases, it comes with risks. According to recent findings, a human brainstorming partner has logical reasoning, idea evaluation, and justification capabilities that LLMs have not yet fully replicated. A recent paper from Google DeepMind examines the efficacy of self-correction within LLMs, in other words, a model correcting its own outputs without any meaningful external feedback from the user. This would be the equivalent of asking your coworker, “Are you sure about that?” The experiments reveal that LLMs cannot reliably evaluate the accuracy of their outputs, sometimes even changing correct outputs to incorrect ones when asked to self-evaluate (more commonly in common-sense tasks).

Another natural feature of human discussion is disagreement, through which one party convinces the other and the two reach, hopefully, a correct consensus. To evaluate this capability in LLMs, a recent paper stages debate-like dialogues between an LLM and a user. Although they rely on absurd examples, the experiments demonstrate that user disagreement can mislead LLMs into believing and affirming falsehoods, even for problems with well-defined truth such as math problems. As we move towards using LLMs as a problem-solving partner, we must be careful of their existing limitations in reasoning.

A Case Study in Healthcare

A major area of AI investment is healthcare. Google DeepMind has recently developed AMIE, an experimental LLM for conversational differential diagnosis. Diagnostic AI has enormous potential for increasing consistency, accessibility, and quality of care, especially in remote regions. While there are intangible benefits of a real-life physician-patient dialogue, AMIE impressively demonstrated greater diagnostic accuracy than primary care physicians (PCPs) in text-based consultations, and superior performance on 28 of 32 axes, according to specialist physicians. The LLM was trained on both real-world clinician conversations and simulated data.

Further research in this area is undoubtedly important, but there are concerns about the methods of data collection used for training such LLMs. In a recent case study, researchers examined the potential ramifications of voice data collection during health consultations from the perspective of clinicians. The study highlights eight prospective risks for both patients and clinicians, including self-censorship, obstruction to care services, legal risks, workflow disruptions, and privacy. Given that health care is such a high-stakes domain, special attention must be paid not only to the impact of AI model deployment but also to the methods of data collection in these domains.

Contact Us

We welcome your feedback and suggestions for this monthly newsletter at sabi@mit.edu. Tell us what you’d like to see! We also look forward to your participation in our February 29 workshop on Responsible AI.